5 Best Big Data Databases

Features, Benefits, Pricing

With 11 years in big data services, ScienceSoft assists companies with selecting and implementing proper software for their big data initiatives.

Big Data Databases: the Essence

Big data is multi-source, massive-volume data of different types (structured, semi-structured, and unstructured) that requires a special approach to storage and processing.

The distinctive features of big data databases are the absence of rigid schemas and the ability to store petabytes of data. NoSQL (non-relational) database systems are optimized for big data: they scale horizontally and enable quick, cost-effective processing of large data volumes and many concurrent queries.

Even though non-relational databases have proved better for high-performance, agile processing of data at scale, relational solutions such as Amazon Redshift and Azure Synapse Analytics are now optimized for querying massive data sets, which makes them a viable option for big data.

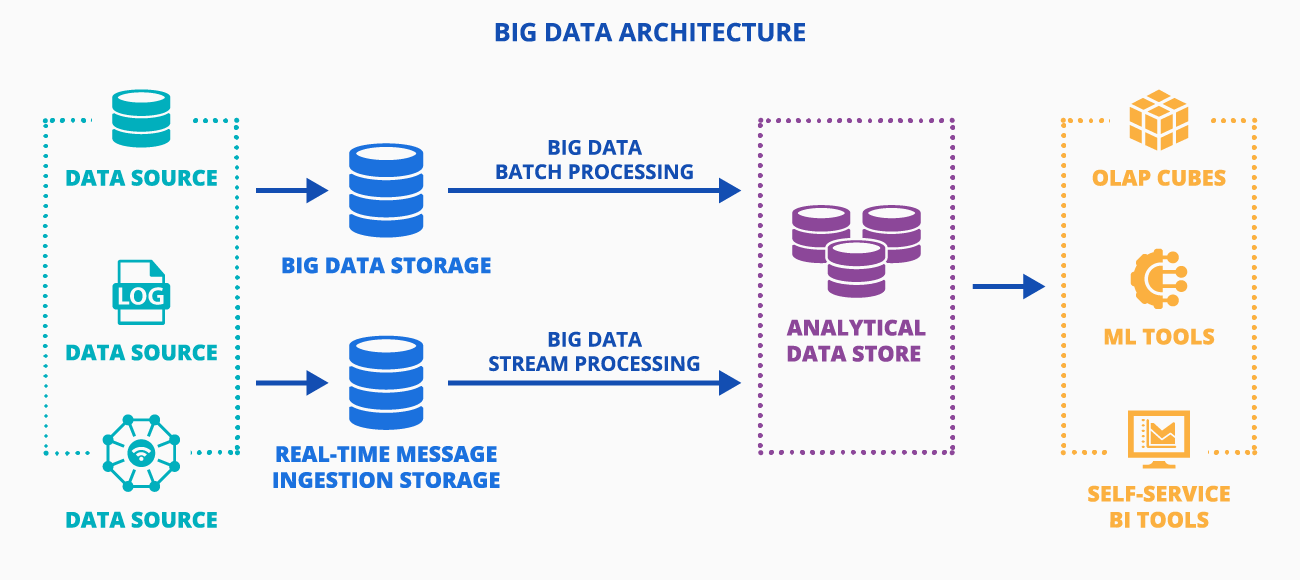

Big Data Architecture and the Place of Big Data Databases in It

Big data architecture may include the following components:

- Data sources – relational databases, files (e.g., web server log files) produced by applications, real-time data produced by IoT devices.

- Big data storage – NoSQL databases for storing large volumes of data of different types before the data is filtered, aggregated, and prepared for analysis.

- Real-time message ingestion store – to capture and store real-time messages for stream processing.

- Analytical data store – relational databases for preparing and structuring big data for further analytical querying.

- Big data analytics and reporting, which may include OLAP cubes, ML tools, self-service BI tools, etc. – to provide big data insights to end users.

Features of Big Data Databases

Data storage

- Storing petabytes of data.

- Storing unstructured, semi-structured and structured data.

- Distributed schema-agnostic big data storage.

Data model options

- Key-value.

- Document-oriented.

- Graph.

- Wide-column store.

- Multi-model.
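To make these options concrete, here is a minimal sketch of how the same hypothetical customer and order data might be shaped under each model. Plain Python structures stand in for the storage engines; all keys, field names, and values are illustrative assumptions, not any database's API.

```python
# Hypothetical customer/order data expressed in each data model.
# Plain Python structures stand in for storage engines; no database API is used.

# Key-value: an opaque value looked up by a single key.
key_value = {
    "customer#42": '{"name": "Ada", "city": "Austin"}',
}

# Document-oriented: nested, self-describing records; fields can differ per document.
documents = [
    {"_id": 42, "name": "Ada", "address": {"city": "Austin"}, "orders": [101, 102]},
    {"_id": 43, "name": "Lin", "loyalty_tier": "gold"},  # no address, extra field
]

# Wide-column: rows grouped by a row/partition key, columns sorted within the row.
wide_column = {
    "customer#42": {"order:101": 250.0, "order:102": 99.5},
}

# Graph: nodes plus explicit, typed relationships between them.
nodes = {"c42": {"label": "Customer"}, "p7": {"label": "Product"}}
edges = [("c42", "PURCHASED", "p7")]


def neighbors(node_id: str, relation: str) -> list:
    """Follow outgoing edges of one relationship type from a node."""
    return [dst for src, rel, dst in edges if src == node_id and rel == relation]


# A multi-model database exposes several of these shapes over one engine.
print(key_value["customer#42"])
print([d["name"] for d in documents if "address" in d])
print(sorted(wide_column["customer#42"]))
print(neighbors("c42", "PURCHASED"))
```

The trade-off the sketch hints at: key-value lookups are the simplest and fastest, documents tolerate varying fields, wide-column rows suit ordered per-key data, and graphs make relationships first-class.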

Data querying

- Support for multiple concurrent queries.

- Batch and streaming/real-time big data loading/processing.

- Support for analytical workloads.
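The difference between batch and streaming processing can be sketched as follows: a batch job aggregates a complete dataset in one pass, while a streaming job updates the aggregate as each event arrives and can report results continuously. The event shape and function names are hypothetical, and a Python generator stands in for a real stream-processing engine.

```python
from collections import Counter
from typing import Iterable, Iterator


def batch_count(events: list) -> Counter:
    """Batch: the full dataset is available up front and aggregated in one pass."""
    return Counter(e["device"] for e in events)


def streaming_count(events: Iterable[dict]) -> Iterator[Counter]:
    """Streaming: the aggregate is updated per event and emitted after each one."""
    running = Counter()
    for e in events:
        running[e["device"]] += 1
        yield Counter(running)  # copy, so earlier results are not mutated later


events = [{"device": "t1"}, {"device": "t2"}, {"device": "t1"}]
print(batch_count(events))
for snapshot in streaming_count(iter(events)):
    print(dict(snapshot))
```

Once the stream has been fully consumed, the last streaming result equals the batch result; the streaming version simply makes intermediate answers available earlier.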

Database performance

- Horizontal scaling for elastic resource setup and provisioning.

- Automatic big data replication across multiple servers for lower latency and high availability (SLAs of up to 99.999%).

- On-demand and provisioned capacity modes.

- Automated deletion of expired data from tables (time to live, TTL).
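Horizontal scaling and replication typically rest on partitioning: each item's partition key is hashed to a partition, and each partition is copied to several servers. The sketch below assumes a hypothetical four-node cluster and a replication factor of 3, and uses simple modulo hashing for brevity; production systems generally use consistent hashing or a managed partition map so that adding a node moves only a fraction of the data.

```python
import hashlib
from collections import Counter

# Hypothetical cluster layout; names and sizes are assumptions for illustration.
NODES = ["node-a", "node-b", "node-c", "node-d"]
NUM_PARTITIONS = 16
REPLICATION_FACTOR = 3


def partition_of(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a partition key to a partition with a stable hash (MD5, not for security)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def replicas_for(key: str, nodes=NODES, rf: int = REPLICATION_FACTOR) -> list:
    """Place a key's partition on `rf` distinct nodes, walking the node list in order."""
    if rf > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    start = partition_of(key) % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(rf)]


# Count how many key copies each node holds: the load spreads across the cluster.
load = Counter()
for i in range(1000):
    for node in replicas_for(f"device#{i}"):
        load[node] += 1
print(load)
```

Because every key lives on three nodes, losing one node leaves two readable copies, which is the basis of the availability figures these databases advertise.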

Database security and reliability

- Big data encryption in transit and at rest.

- User authorization and authentication.

- Continuous and on-demand backup and restore.

- Point-in-time restore.

- Compliance with national, regional, and industry-specific regulations such as GDPR (for the EU), PDPL (for Saudi Arabia), and HIPAA (for the healthcare industry).
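Point-in-time restore is commonly built from a periodic snapshot plus a log of later changes: the changes logged after the snapshot are replayed on top of it up to the requested moment. The following is a simplified, single-table sketch of that idea under assumed names (`Change`, `restore_to`); managed services implement it internally and expose it through their own consoles and APIs.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Change:
    ts: int               # assumed timestamp, e.g., seconds since the epoch
    key: str
    value: Optional[Any]  # None marks a delete


def restore_to(snapshot: dict, snapshot_ts: int, log: list, target_ts: int) -> dict:
    """Rebuild table state as of target_ts from a snapshot plus a change log."""
    if target_ts < snapshot_ts:
        raise ValueError("target time precedes the snapshot; start from an older one")
    state = dict(snapshot)  # never mutate the stored snapshot
    for change in sorted(log, key=lambda c: c.ts):
        if change.ts <= snapshot_ts:
            continue  # already reflected in the snapshot
        if change.ts > target_ts:
            break  # changes after the requested moment are not applied
        if change.value is None:
            state.pop(change.key, None)
        else:
            state[change.key] = change.value
    return state


snapshot = {"order#1": "paid"}
log = [Change(110, "order#2", "new"), Change(120, "order#1", None), Change(130, "order#2", "shipped")]
print(restore_to(snapshot, 100, log, 125))  # state just before order#2 shipped
```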

Best Big Data Databases for Comparison

According to the Forrester Wave report, leading solutions for data analytics and processing include MongoDB, Google AlloyDB, Amazon DynamoDB, Azure Cosmos DB, and Google BigQuery. ScienceSoft has proven expertise in market-leading technologies but remains technology-neutral: we choose the optimal toolset based on the value it will bring in each case.

Below, our experts provide a comparison of several big data databases ScienceSoft uses in its projects.

Amazon DynamoDB

Description

A fully managed NoSQL database recognized as a Leader in the Forrester Wave report. It delivers single-digit millisecond performance at virtually any scale.

- Key-value and document data models

- ACID transactions

- Microsecond latency with DynamoDB Accelerator (DAX)

- DynamoDB Streams for real-time data processing

- Integration with AWS services (S3, EMR, Redshift)

- On-demand and provisioned capacity modes

- End-to-end encryption and point-in-time recovery

Best for

Operational workloads, IoT, mobile and social apps, gaming, ecommerce platforms.

Pricing

US East region, Standard table class:

- On-demand: ~$1.25 per million write request units (WRUs), ~$0.25 per million read request units (RRUs)

- Provisioned: ~$0.00065 per write capacity unit (WCU)-hour, ~$0.00013 per read capacity unit (RCU)-hour

- Storage: first 25 GB/month free, ~$0.25 per GB-month thereafter
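To compare the two capacity modes, a rough monthly estimate can be computed from the prices above. This sketch assumes items of up to 1 KB (one WRU or WCU per write), strongly consistent reads of up to 4 KB (one RRU or RCU per read), a 730-hour month, and a fixed provisioned level; it ignores the throughput free tier, auto scaling, reserved capacity, backups, streams, and data transfer. Prices change, so treat the constants as placeholders to update.

```python
HOURS_PER_MONTH = 730  # common approximation for monthly estimates

# Prices as listed above (US East, Standard table class); assumptions to keep current.
ON_DEMAND_PER_MILLION_WRU = 1.25
ON_DEMAND_PER_MILLION_RRU = 0.25
WCU_HOUR = 0.00065
RCU_HOUR = 0.00013
STORAGE_GB_MONTH = 0.25
FREE_STORAGE_GB = 25


def storage_cost(storage_gb: float) -> float:
    """Storage is free up to 25 GB, then billed per GB-month."""
    return max(0.0, storage_gb - FREE_STORAGE_GB) * STORAGE_GB_MONTH


def on_demand_monthly(writes_millions: float, reads_millions: float, storage_gb: float) -> float:
    """Pay per request: cost follows actual traffic."""
    return (writes_millions * ON_DEMAND_PER_MILLION_WRU
            + reads_millions * ON_DEMAND_PER_MILLION_RRU
            + storage_cost(storage_gb))


def provisioned_monthly(wcu: int, rcu: int, storage_gb: float, hours: float = HOURS_PER_MONTH) -> float:
    """Pay for reserved throughput every hour, whether or not it is used."""
    return (wcu * WCU_HOUR * hours
            + rcu * RCU_HOUR * hours
            + storage_cost(storage_gb))


# 50 million writes and 200 million reads per month, 125 GB stored
print(round(on_demand_monthly(50, 200, 125), 2))     # 137.5
# 100 WCU and 500 RCU provisioned around the clock, 125 GB stored
print(round(provisioned_monthly(100, 500, 125), 2))  # 119.9
```

Because provisioned capacity is billed even when idle, it tends to pay off for steady, predictable traffic, while on-demand suits spiky or unknown workloads.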

Azure Cosmos DB

Description

A multi-model database recognized as a Leader in the Forrester Wave report.

- Support for multiple APIs (SQL, MongoDB, Cassandra, Gremlin, Table)

- Real-time analytics with Azure Synapse Link (no ETL required)

- 99.999% availability SLAs

- ACID transactions

- On-demand (serverless) and provisioned throughput modes

- Advanced security: encryption in transit/at rest, fine-grained access control

Best for

Ecommerce, gaming, IoT, and operations-intensive applications requiring global scale.

Pricing

US East region:

- Provisioned throughput: from ~$0.008 (manual) or ~$0.012 (autoscale) per hour per 100 RU/s

- Serverless: ~$0.25 per 1 million request units (RU)

- Storage: ~$0.25 per GB-month
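The three Cosmos DB throughput options bill differently, which a small calculator makes visible. This sketch uses the prices above and a 730-hour month, and it assumes the autoscale billing rule of charging each hour for the highest RU/s the container scaled to, with a floor of 10% of the configured maximum. It covers a single region and ignores multi-region writes, backups, and reserved capacity; the function names are hypothetical.

```python
HOURS_PER_MONTH = 730

# Prices as listed above (US East); placeholders to update as pricing changes.
MANUAL_PER_100_RUS_HOUR = 0.008
AUTOSCALE_PER_100_RUS_HOUR = 0.012
SERVERLESS_PER_MILLION_RU = 0.25
STORAGE_GB_MONTH = 0.25


def manual_monthly(rus: int, storage_gb: float, hours: float = HOURS_PER_MONTH) -> float:
    """Fixed provisioned RU/s, billed every hour whether used or not."""
    return rus / 100 * MANUAL_PER_100_RUS_HOUR * hours + storage_gb * STORAGE_GB_MONTH


def autoscale_monthly(max_rus: int, hourly_peaks: list, storage_gb: float) -> float:
    """Each hour billed on its peak RU/s, clamped between 10% of max and max (assumed rule)."""
    floor = max_rus * 0.10
    billed = sum(max(floor, min(peak, max_rus)) for peak in hourly_peaks)
    return billed / 100 * AUTOSCALE_PER_100_RUS_HOUR + storage_gb * STORAGE_GB_MONTH


def serverless_monthly(total_rus_consumed: float, storage_gb: float) -> float:
    """Pay only for the request units actually consumed."""
    return total_rus_consumed / 1_000_000 * SERVERLESS_PER_MILLION_RU + storage_gb * STORAGE_GB_MONTH


# A workload that idles most of the month but peaks at 4,000 RU/s for 30 hours, 50 GB stored
peaks = [300] * 700 + [4000] * 30
print(round(manual_monthly(4000, 50), 2))            # 246.1
print(round(autoscale_monthly(4000, peaks, 50), 2))  # 60.5
# A lighter workload consuming 100 million RUs in the month, 50 GB stored
print(round(serverless_monthly(100_000_000, 50), 2))  # 37.5
```

Manual throughput must be sized for the peak, so bursty workloads usually favor autoscale, and light or intermittent ones favor serverless.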

Amazon Keyspaces

Description

Managed Apache Cassandra-compatible database service.

- Supports Apache CQL API and Cassandra drivers/tools

- On-demand and provisioned capacity modes

- Encryption in transit and at rest

- Continuous backup with point-in-time recovery

- 99.99% regional availability

- Integrated with AWS CloudWatch and IAM

Best for

Fleet management, industrial IoT, and high-availability workloads requiring Cassandra compatibility.
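Because Keyspaces uses the Cassandra data model, a fleet-telemetry table would typically group readings by a partition key and sort them by a clustering column, for example a hypothetical CQL table with `PRIMARY KEY ((vehicle_id, day), ts)`. The in-memory Python sketch below mimics that layout to show why single-partition time-range reads are cheap; the class and column names are assumptions, and this is not a Cassandra driver.

```python
import bisect
from collections import defaultdict


class TelemetryTable:
    """In-memory stand-in for a table keyed by ((vehicle_id, day), ts)."""

    def __init__(self):
        # partition key -> sorted clustering keys, with rows kept in the same order
        self._ts = defaultdict(list)
        self._rows = defaultdict(list)

    def upsert(self, vehicle_id: str, day: str, ts: int, **columns) -> None:
        pk = (vehicle_id, day)
        keys, rows = self._ts[pk], self._rows[pk]
        i = bisect.bisect_left(keys, ts)
        if i < len(keys) and keys[i] == ts:
            rows[i].update(columns)  # same primary key: overwrite only the given columns
        else:
            keys.insert(i, ts)
            rows.insert(i, dict(columns))

    def read_range(self, vehicle_id: str, day: str, ts_from: int, ts_to: int) -> list:
        """Slice one partition by clustering key (inclusive bounds)."""
        pk = (vehicle_id, day)
        if pk not in self._ts:
            return []
        keys = self._ts[pk]
        lo = bisect.bisect_left(keys, ts_from)
        hi = bisect.bisect_right(keys, ts_to)
        return [(keys[i], self._rows[pk][i]) for i in range(lo, hi)]


table = TelemetryTable()
table.upsert("truck-7", "2025-03-01", 160, speed_kmh=70.5)
table.upsert("truck-7", "2025-03-01", 100, speed_kmh=62.0)
table.upsert("truck-7", "2025-03-01", 130, speed_kmh=0.0, engine="idle")
print(table.read_range("truck-7", "2025-03-01", 100, 140))
```

Bucketing each vehicle's data by day keeps partitions bounded in size, a common Cassandra modeling practice for time-series data.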

Pricing

US East region:

- On-demand: ~$1.45 per million write request units, ~$0.29 per million read request units

- Provisioned: ~$0.00075 per WCU-hour, ~$0.00015 per RCU-hour

- Storage: ~$0.30 per GB-month

Amazon DocumentDB

Description

A fully managed database service with MongoDB API compatibility.

- Supports ACID transactions

- Migration support with AWS Database Migration Service

- Built-in roles and role-based access control

- Network isolation and automated failover

- Cluster snapshots and point-in-time restore

Best for

User profiles, catalogs, content management, and other document-centric apps.
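Document databases such as DocumentDB are queried with MongoDB-style filters (for example, through a MongoDB driver's `find()` call). To illustrate those semantics on a product catalog, the sketch below evaluates a small, assumed subset of the filter language in plain Python: dotted paths into nested documents, equality that also matches array elements, and `$in`. It is not DocumentDB's implementation and omits most operators; the helper names are hypothetical.

```python
_MISSING = object()  # sentinel for absent fields


def get_path(doc: dict, dotted: str):
    """Resolve a dotted path such as 'attrs.color' in nested dictionaries."""
    current = doc
    for part in dotted.split("."):
        if not isinstance(current, dict) or part not in current:
            return _MISSING
        current = current[part]
    return current


def matches(doc: dict, filt: dict) -> bool:
    """True if every condition in the filter holds for the document."""
    for path, cond in filt.items():
        value = get_path(doc, path)
        if value is _MISSING:
            return False
        # Equality on an array field matches the whole array or any element.
        candidates = value if isinstance(value, list) else [value]
        if isinstance(cond, dict) and "$in" in cond:
            if not any(c in cond["$in"] for c in candidates):
                return False
        elif cond != value and cond not in candidates:
            return False
    return True


catalog = [
    {"sku": "A1", "title": "Desk lamp", "attrs": {"color": "black"}, "tags": ["lighting", "office"]},
    {"sku": "B2", "title": "Armchair", "attrs": {"color": "grey"}, "tags": ["furniture"]},
    {"sku": "C3", "title": "Floor lamp", "attrs": {"color": "black", "height_cm": 160}, "tags": ["lighting"]},
]

print([d["sku"] for d in catalog if matches(d, {"attrs.color": "black", "tags": "lighting"})])
print([d["sku"] for d in catalog if matches(d, {"tags": {"$in": ["furniture", "office"]}})])
```

Note that documents in the same collection carry different attributes (only one lamp has `height_cm`), which is exactly the flexibility catalogs and user profiles need.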

Pricing

US East region:

- On-demand: ~$0.27 – $8.86 per instance-hour (depending on instance size)

- I/O requests: ~$0.20 per 1 million requests

- Storage: ~$0.10 per GB-month

- Backup storage: ~$0.021 per GB-month

Amazon Redshift

Description

Recognized in Gartner’s 2025 evaluation of cloud databases for analytics for its strength across event analytics, enterprise data warehouse, and lakehouse workloads.

- SQL-based querying at petabyte scale

- Redshift Spectrum for querying data directly in Amazon S3

- Federated queries for operational data

- Automated infrastructure provisioning and scaling

- Row- and column-level security

- Encryption and network isolation by default

Best for

Business intelligence, reporting, and large-scale analytics. Not designed for low-latency OLTP.

Pricing

US East region:

- On-demand: ~$0.25/hour (dc2.large) – ~$13.04/hour (ra3.16xlarge)

- Reserved instances: save up to 75%

- Managed storage (RA3 node types): ~$0.024 per GB-month
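For provisioned clusters, the monthly bill is roughly node-hours times the node rate, plus managed storage for RA3 nodes (DC2 nodes include local storage). The sketch below uses the on-demand rates above and a 730-hour month; the reserved discount is a parameter because the actual saving depends on the term and payment option, with 75% being the upper bound quoted above. Redshift Serverless, Spectrum scans, and concurrency scaling are billed differently and are not modeled; `cluster_monthly` is a hypothetical helper.

```python
HOURS_PER_MONTH = 730

# On-demand node rates from the pricing list above (US East); update as prices change.
NODE_HOUR_USD = {"dc2.large": 0.25, "ra3.16xlarge": 13.04}
RA3_STORAGE_GB_MONTH = 0.024


def cluster_monthly(node_type: str, nodes: int, managed_storage_gb: float = 0.0,
                    reserved_discount: float = 0.0, hours: float = HOURS_PER_MONTH) -> float:
    """Estimate a provisioned cluster's monthly cost in USD."""
    if not 0.0 <= reserved_discount <= 0.75:
        raise ValueError("reserved_discount is expected between 0 and 0.75")
    compute = NODE_HOUR_USD[node_type] * nodes * hours * (1 - reserved_discount)
    # Only RA3 nodes bill managed storage separately.
    storage = managed_storage_gb * RA3_STORAGE_GB_MONTH if node_type.startswith("ra3") else 0.0
    return compute + storage


print(round(cluster_monthly("dc2.large", 2), 2))                   # 365.0
print(round(cluster_monthly("ra3.16xlarge", 2, 10_000), 2))        # 19278.4
print(round(cluster_monthly("ra3.16xlarge", 2, 10_000, 0.75), 2))  # 4999.6
```

Separating compute from storage is what lets RA3 clusters grow storage without adding nodes, while a reserved commitment mainly reduces the compute share of the bill.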

Which Big Data Database Suits Your Needs?

There is no one-size-fits-all big data database. Share the nature of your data, how you plan to use the database, and your performance and security requirements, and ScienceSoft's big data experts will recommend the database that best fits your case.


Big Data Database Implementation by ScienceSoft

In software engineering since 1989, we rely on mature project management practices to drive projects to their goals regardless of the challenges that arise, be they time and budget constraints or changing requirements.

Big data consulting

We offer:

- Big data storage, processing, and analytics needs analysis.

- Big data solution architecture.

- An outline of the optimal big data solution technology stack.

- Recommendations on big data quality management and big data security.

- Admin training for big data databases.

- Proof of concept (for complex projects).

Big data database implementation

Our team takes on:

- Big data storage and processing needs analysis.

- Big data solution architecture.

- Big data database integration (integration with big data source systems, a data lake, DWH, ML software, big data analysis and reporting software, etc.).

- Big data governance procedures setup (big data quality, security, etc.).

- Admin and user training.

- Big data database support (if required).

ScienceSoft as a Big Data Consulting Partner

ScienceSoft's team proved their mastery in a vast range of big data technologies we required: Hadoop Distributed File System, Hadoop MapReduce, Apache Hive, Apache Ambari, Apache Oozie, Apache Spark, Apache ZooKeeper are just a couple of names.

ScienceSoft's team also showed themselves great consultants. Special thanks for supporting us during the transition period. Whenever a question arose, we got it answered almost instantly.

Kaiyang Liang Ph.D., Professor, Miami Dade College

About ScienceSoft

ScienceSoft is a global IT consulting and IT service provider headquartered in McKinney, TX, US. Since 2013, we have offered a full range of big data services to help companies select suitable big data software, integrate it into their existing big data environment, and support big data analytics workflows. Being ISO 9001 and ISO 27001-certified, we rely on a mature quality management system and guarantee that cooperation with us poses no risk to our clients’ data security.